Anthropic Reverses Privacy Stance, Will Use Claude Chats for AI Training

Anthropic will now use consumer chats from its Claude AI for model training, a policy shift that requires users who object to actively opt out.

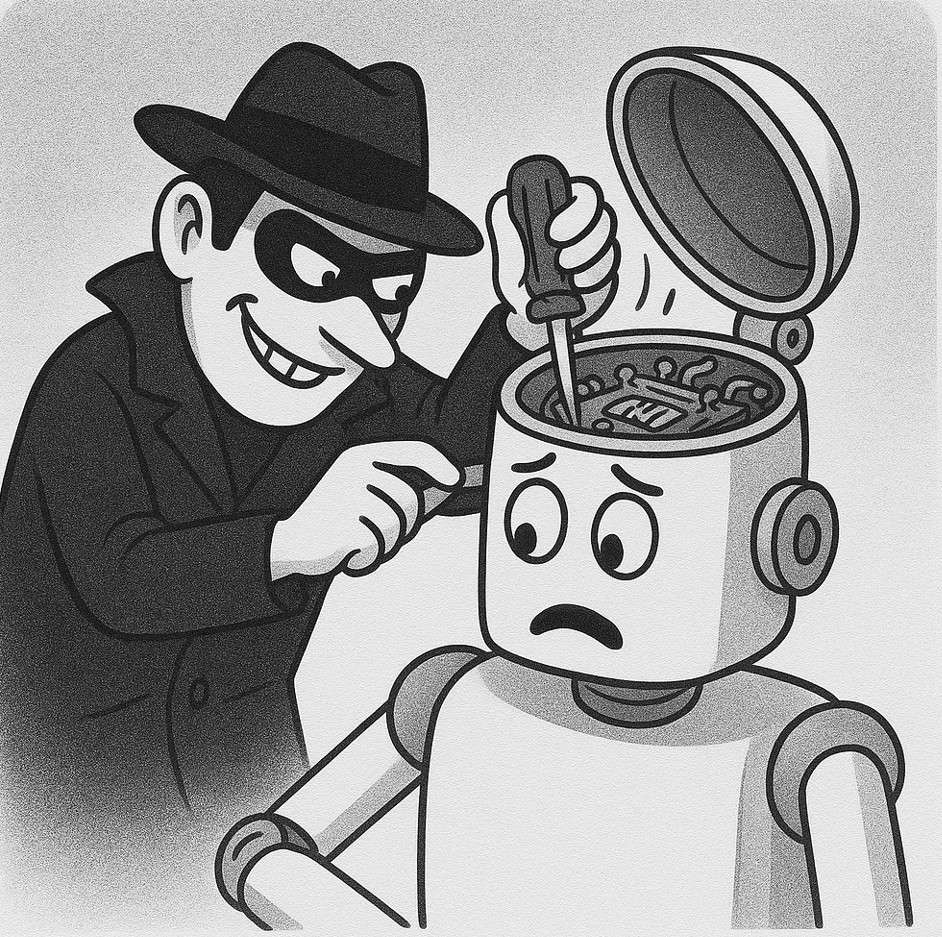

Anthropic, the AI firm behind the Claude chatbot, is walking back its foundational commitment to user privacy by announcing it will now use consumer chat logs to train its artificial intelligence models. This controversial policy pivot abandons a key principle that distinguished the company from its rivals, shifting the burden of privacy protection onto its users through an opt-out system.

The company, which had built its reputation as a more ethical and privacy-focused alternative in the AI space, will now incorporate conversations from individual users on its Free, Pro, and Max plans into its training datasets by default. Users are being shown in-app notifications and have until September 28, 2025, to make their choice; after that date, they must accept the updated terms and make a selection to continue using Claude.

Further fueling the debate is a massive extension of the data retention period. For individuals who permit their chats to be used, Anthropic will now store their conversations for up to five years, a significant increase from the 30-day retention period that continues to apply to users who opt out. While the company says the change will improve model safety and performance, many observers see it as a strategic necessity for competing with data-rich rivals like Google and OpenAI.

This policy revision establishes a clear, two-tiered system of privacy. The changes do not apply to Anthropic's more lucrative enterprise, government, or API clients, who will continue to benefit from contractual guarantees that their data remains confidential and is not used for training. In effect, the conversations of ordinary users are being leveraged to develop and refine the core technology that is then sold to corporate customers under much stricter privacy protections.

The decision has been met with criticism from users who felt betrayed by the reversal, with some describing it as a classic case of a tech platform degrading its user experience for corporate benefit. By aligning with the industry's more conventional data-harvesting practices, Anthropic has traded a unique brand identity for a more powerful position in the AI arms race, leaving users to navigate the fine print to safeguard their own data.