Kimi K2 Thinking Is Released

Kimi K2 Thinking, a Chinese 1-trillion-parameter "thinking agent" reportedly trained for just $4.6 million, resets the AI race.

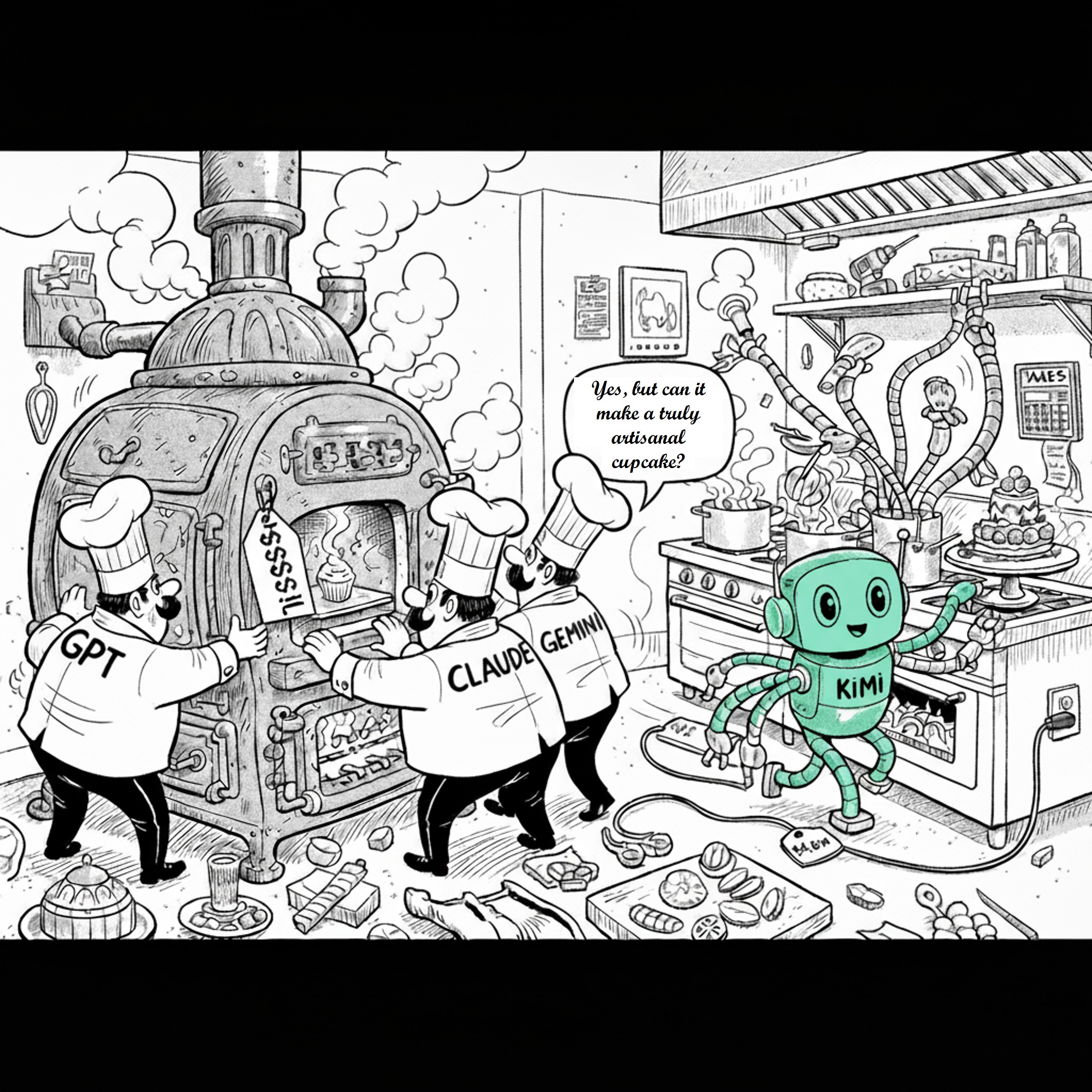

A profound shift in the artificial intelligence sector occurred this week. Beijing-based Moonshot AI, one of China's so-called "AI Tigers," launched its Kimi K2 Thinking model on November 6, 2025. The release is less an incremental update than a new strategic direction. The open-source model is engineered as a "thinking agent," built specifically to work through long chains of sequential reasoning and to operate tools on its own. Kimi K2 Thinking takes direct aim at leading proprietary Western systems, such as OpenAI's GPT-5, Anthropic's Claude Sonnet 4.5, and Google's Gemini 2.5, by competing on two fronts: agentic capability and cost-effectiveness.

Moonshot AI says its 1-trillion-parameter model achieves state-of-the-art (SOTA) results on agentic-focused evaluations. The training budget was reportedly only $4.6 million, less than 5% of the $100 million or more associated with models like GPT-4, which would imply a fundamental change in the economics of AI development. A closer look, however, portrays Kimi K2 Thinking as a "rough diamond." Its general-purpose ability, as judged by user-preference platforms such as the LMSYS Chatbot Arena, is not yet on par with its more polished competitors, a gap attributed to "engineering and ecosystem integration" challenges.

Kimi K2 Deconstructed: Architecture on a Budget

Kimi K2 Thinking is the latest specialized version in a series that also includes the Kimi-K2-Base and Kimi-K2-Instruct models. Its architecture is a 1.04-trillion-parameter Mixture-of-Experts (MoE) system that activates only 32 billion parameters for any given token. It supports a 256K-token context window and uses native INT4 quantization, applied through quantization-aware training, to speed up inference. The training process itself is noteworthy. Pre-training covered 15.5 trillion tokens and used Moonshot's MuonClip optimizer, which extends the Muon optimizer with a QK-Clip mechanism that rescales the attention query and key weights whenever attention logits grow too large. Moonshot AI claims this let it complete pre-training with "zero training instability." The company presents this algorithmic-level stability as a key reason for the model's disruptively low training cost, since a run that never diverges wastes no compute on restarts and rollbacks.
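To make the "1.04 trillion total, 32 billion active" distinction concrete, here is a minimal, hypothetical sketch of top-k expert routing for a single token, written in plain Python with scalar toy "experts." The public Kimi K2 model card lists 384 routed experts with 8 selected per token plus one shared expert; the sketch keeps that shape (routed experts, a top-k selection, an always-on shared expert) but shrinks it to four toy experts. The softmax-over-selected gating is a common MoE choice assumed here, not taken from Moonshot's code.

```python
import math

# Active-parameter share implied by the figures above: 32B of 1.04T per token.
ACTIVE_FRACTION = 32e9 / 1.04e12

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_logits, k):
    """Return (expert_index, gate_weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_logits[i] for i in top])  # renormalize over chosen experts only
    return list(zip(top, weights))

def moe_forward(x, experts, shared_expert, router_logits, k):
    """Shared expert always runs; only the k routed experts contribute, the rest stay idle."""
    out = shared_expert(x)
    for idx, weight in route_token(router_logits, k):
        out += weight * experts[idx](x)
    return out

# Toy setup (hypothetical): 4 routed experts, 2 active per token, scalar inputs.
experts = [lambda x, i=i: (i + 1) * x for i in range(4)]
shared = lambda x: x
logits = [0.1, 2.0, 1.0, -1.0]
print(route_token(logits, k=2))  # experts 1 and 2 are selected
print(moe_forward(1.0, experts, shared, logits, k=2))
print(f"{ACTIVE_FRACTION:.1%}")  # 3.1%
```

Because per-token compute scales with the experts that actually run rather than with the full parameter count, a sparse model of this kind can hold a trillion parameters while doing roughly the feed-forward work of a much smaller dense model for each token.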

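The stability mechanism can be illustrated with a simplified, single-head sketch of the QK-Clip idea as Moonshot describes it: after an optimizer step, if the largest attention logit observed exceeds a threshold τ, both the query and key projection weights are scaled by sqrt(τ / S_max). Because each logit is bilinear in W_q and W_k, this brings the maximum logit back to exactly τ. The pure-Python matrices, the threshold value, and the omission of the 1/√d scaling and of Moonshot's per-head details are all simplifying assumptions; this is an illustration of the idea, not Moonshot's implementation.

```python
import math

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def max_attention_logit(W_q, W_k, xs):
    """Largest raw query-key score q_i . k_j over all token pairs for one head."""
    qs = [matvec(W_q, x) for x in xs]
    ks = [matvec(W_k, x) for x in xs]
    return max(dot(q, k) for q in qs for k in ks)

def qk_clip(W_q, W_k, xs, tau):
    """Rescale W_q and W_k so the max logit is at most tau (no-op if already below)."""
    s_max = max_attention_logit(W_q, W_k, xs)
    if s_max <= tau:
        return W_q, W_k
    gamma = tau / s_max
    scale = math.sqrt(gamma)  # split evenly: each logit is bilinear in W_q and W_k
    new_q = [[w * scale for w in row] for row in W_q]
    new_k = [[w * scale for w in row] for row in W_k]
    return new_q, new_k

# Toy example (illustrative values): a logit of 400 exceeds tau = 100.
W_q = [[10.0, 0.0], [0.0, 10.0]]
W_k = [[10.0, 0.0], [0.0, 10.0]]
xs = [[1.0, 0.0], [0.0, 2.0]]
print(max_attention_logit(W_q, W_k, xs))              # 400.0
clipped_q, clipped_k = qk_clip(W_q, W_k, xs, tau=100.0)
print(max_attention_logit(clipped_q, clipped_k, xs))  # 100.0
```

Clipping the weights rather than the logits means the correction is baked into the parameters, so nothing extra has to run at inference time.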
The "Agentic Intelligence" Paradigm

Kimi K2 reflects a core strategic focus on "Agentic Intelligence," which prioritizes moving from simply providing "answers" to actively performing "actions." The model's most prominent capability is its ability to execute "up to 200–300 sequential tool calls without human interference." This is a major advance: earlier models reportedly broke down after 30–50 steps, which made it impractical to delegate genuinely complex, multi-step tasks. This targeted training has produced SOTA claims on specific agentic benchmarks. Moonshot's published data shows Kimi K2 Thinking outperforming GPT-5 on Humanity's Last Exam with tools (44.9% vs. 41.7%) and on BrowseComp (60.2% vs. 54.9%). On agentic coding it posts a competitive 71.3% on SWE-Bench Verified, although on that benchmark it still trails the top proprietary models.
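At its core, a long-horizon agent is a loop that alternates between model decisions and tool executions until the model produces a final answer or a step budget runs out. This is a hypothetical sketch: `Action`, `run_agent`, the `policy` callable, and the toy `add_one` tool are illustrative names, not Moonshot's API, and the default budget of 300 simply mirrors the upper end of the 200–300 figure quoted above.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Action:
    """One model decision: either a tool call or a final answer (hypothetical shape)."""
    tool: Optional[str] = None
    argument: str = ""
    final_answer: Optional[str] = None

def run_agent(policy, tools, task, max_steps=300):
    """Alternate policy decisions and tool calls until an answer or the step budget."""
    history = [("task", task)]
    for step in range(1, max_steps + 1):
        action = policy(history)
        if action.final_answer is not None:
            return action.final_answer, step
        result = tools[action.tool](action.argument)
        history.append((action.tool, result))  # the tool output feeds the next decision
    raise RuntimeError(f"no final answer within {max_steps} steps")

def counting_policy(history):
    """Toy stand-in for a model: keep calling add_one until the counter reaches 250."""
    last_kind, last_value = history[-1]
    current = 0 if last_kind == "task" else int(last_value)
    if current >= 250:
        return Action(final_answer=f"reached {current}")
    return Action(tool="add_one", argument=str(current))

TOOLS = {"add_one": lambda s: str(int(s) + 1)}

answer, steps = run_agent(counting_policy, TOOLS, "count to 250")
print(answer, steps)  # reached 250 251
```

The returned step count includes the final answering turn, so 250 tool calls yield 251. The same toy task completes under a 300-step budget but raises an error if the budget is cut to 50 steps, roughly where earlier models reportedly broke down.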

Strategic & Economic Impact

Moonshot's "Modified MIT License" is a clever market strategy. The model is freely available as open-source, with one notable condition for large-scale commercial use: if a product or service built on the model exceeds 100 million monthly active users (MAU) or $20 million in monthly revenue, it must "prominently display 'Kimi K2'" on its user interface. This unusual "brand royalty" clause encourages broad community adoption while guaranteeing Moonshot visibility in the largest commercial deployments.
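The attribution condition is simple enough to express as a predicate. This is a simplified reading of the clause as summarized above, not the license text itself; the names `ServiceMetrics` and `must_display_kimi_k2` are hypothetical, and "surpasses" is read as strictly greater than each threshold.

```python
from dataclasses import dataclass

MAU_THRESHOLD = 100_000_000                 # monthly active users
MONTHLY_REVENUE_THRESHOLD_USD = 20_000_000  # monthly revenue in US dollars

@dataclass
class ServiceMetrics:
    monthly_active_users: int
    monthly_revenue_usd: float

def must_display_kimi_k2(m: ServiceMetrics) -> bool:
    """True if EITHER threshold is exceeded; meeting a threshold exactly does not trigger it."""
    return (m.monthly_active_users > MAU_THRESHOLD
            or m.monthly_revenue_usd > MONTHLY_REVENUE_THRESHOLD_USD)

print(must_display_kimi_k2(ServiceMetrics(150_000_000, 1_000_000)))   # True: MAU exceeded
print(must_display_kimi_k2(ServiceMetrics(100_000_000, 20_000_000)))  # False: met, not exceeded
```

Because the two conditions are joined by "or," a small-audience product with high revenue is covered just as a free product with a huge audience is.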