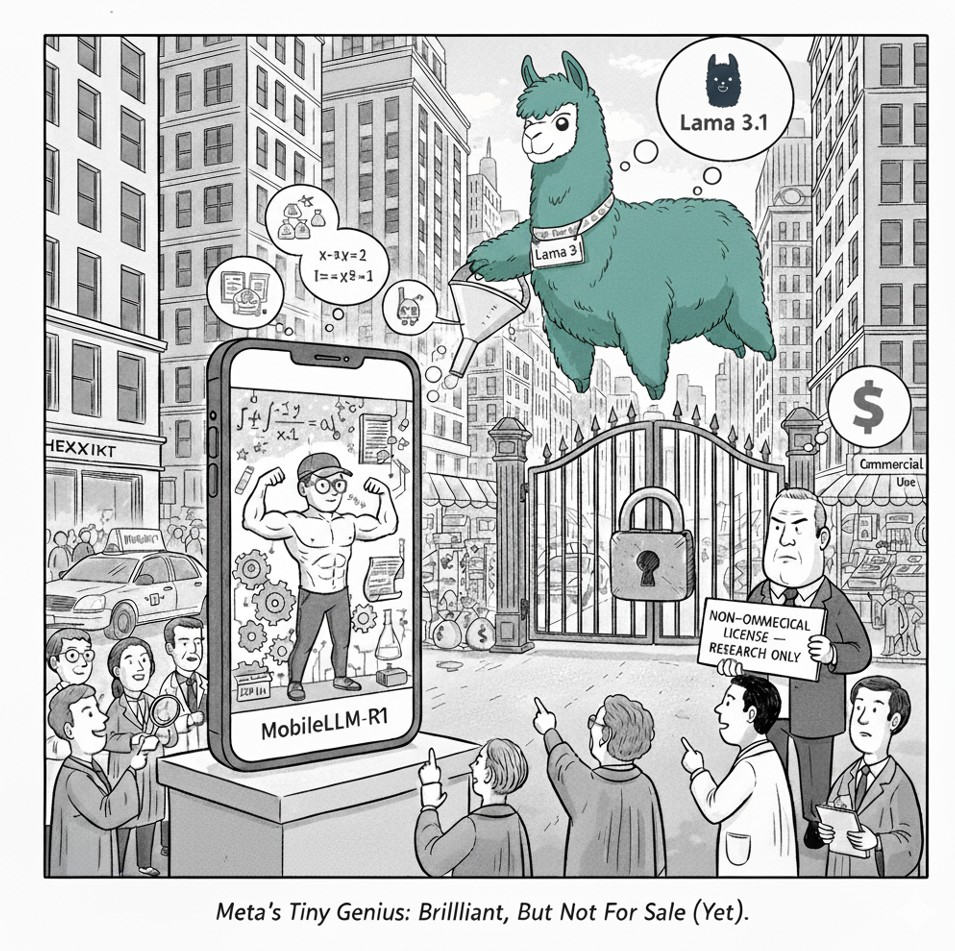

Meta Unveils MobileLLM-R1

MobileLLM-R1 sets a new standard for on-device AI, yet its restrictive license reveals a calculated strategy to separate open research from commercial application.

Meta's research division has unveiled MobileLLM-R1, a series of compact language models engineered for high-performance reasoning on devices like smartphones. This AI is not a general-purpose chatbot but a specialist, fine-tuned for complex tasks in mathematics, coding, and scientific logic.

The model's primary innovation is its efficiency. The largest version, with 950 million parameters, performs on par with, or better than, competing models that were trained on up to nine times more data. This efficiency stems from careful architectural design and a multi-stage training regimen that includes knowledge transfer from a much larger model, Llama 3.1.
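The article describes the knowledge transfer from Llama 3.1 only at a high level. A common way to transfer knowledge from a large model to a small one is knowledge distillation, in which a small "student" is trained to match a larger "teacher's" softened output distribution as well as the ground-truth labels. The sketch below shows the textbook (Hinton-style) distillation loss for a single token position in plain Python. It is a generic illustration under that assumption, not Meta's actual MobileLLM-R1 training recipe; the function names and the `temperature` and `alpha` parameters are hypothetical choices for this example.

```python
import math
from typing import Sequence

# Generic knowledge-distillation sketch (assumed technique, not Meta's recipe).


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() numerically stable."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for two discrete distributions over the same vocabulary."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    target: int,
    temperature: float = 2.0,  # hypothetical default; >1 softens both distributions
    alpha: float = 0.5,        # hypothetical weight between hard and soft terms
) -> float:
    """Blend hard-label cross-entropy with a soft-label KL term against the teacher."""
    # Hard-label term: ordinary cross-entropy against the ground-truth token.
    hard = -math.log(softmax(student_logits)[target])
    # Soft-label term: push the student's softened distribution toward the teacher's.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Scaling by T^2 keeps the soft term's gradients comparable as T changes.
    soft = kl_divergence(p_teacher, p_student) * temperature**2
    return alpha * hard + (1 - alpha) * soft


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]  # toy logits from a large "teacher" model
    student = [1.5, 0.8, 0.3]  # toy logits from a small "student" model
    print(distillation_loss(student, teacher, target=0))
```

When the student's logits exactly match the teacher's, the soft term drops to zero and only the weighted cross-entropy remains. A higher temperature exposes more of the teacher's relative preferences among wrong answers, which is the signal a small model cannot easily learn from hard labels alone.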

Despite its strengths, MobileLLM-R1 has clear limitations. Its specialization in logic means it struggles with creative or conversational tasks. However, the most significant barrier to its widespread use is its restrictive non-commercial license, which prohibits its integration into any revenue-generating products and confines its application to academic and research settings.

This release signals a deliberate strategy from Meta, distinguishing its commercially friendly Llama models from foundational research projects. MobileLLM-R1 serves as a powerful proof of concept, challenging the industry's reliance on massive scale by showing that smaller, highly efficient models can lead the field in specialized domains. While it represents a significant technical breakthrough for the future of on-device AI, its license ensures it remains a research tool rather than a market-ready product.