The AI Dilemma: Privacy vs. Harm Avoidance

OpenAI's monitoring of user chats creates a dilemma between preventing harm and protecting privacy, a conflict heightened by the risk of false positives leading to invasive interventions.

OpenAI actively monitors user conversations in its ChatGPT service for dangerous content, a policy that includes escalating certain threats to law enforcement and that has ignited a fierce debate over digital privacy. The revelation contradicted many users' expectation of confidentiality and prompted a significant public backlash from those who felt betrayed by the perceived surveillance.

The company's protocol is a two-pronged system that treats self-harm and threats to others differently. For users expressing suicidal ideation, the AI is designed to de-escalate, offering empathetic responses and directing them to crisis resources like the 988 hotline, without police involvement. However, if a conversation is flagged as containing a credible, imminent threat of physical harm to other people, it is routed to a human review team authorized to contact the authorities.

This monitoring relies on AI classifiers that, while demonstrating high accuracy in academic studies, are not infallible. The cost of error is high in both directions: a false positive could trigger a traumatic, unwarranted police intervention, while a false negative could fail to prevent a real-world tragedy. User privacy is further compromised by external legal mandates, such as a court order compelling OpenAI to preserve all chats indefinitely, which overrides any user-facing deletion options.
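The false-positive risk described above can be made concrete with a little base-rate arithmetic. The sketch below is purely illustrative: the function name `flag_outcomes` and every number passed to it (conversation volume, prevalence of genuine threats, classifier sensitivity and specificity) are hypothetical assumptions, not figures reported by OpenAI or by any study. It shows how a classifier that is right 99% of the time can still produce far more false alarms than true detections when real threats are rare.

```python
def flag_outcomes(n_conversations, prevalence, sensitivity, specificity):
    """Expected outcomes for a binary threat classifier.

    All inputs are hypothetical, chosen only to illustrate the base-rate effect.
    prevalence  -- fraction of conversations that contain a genuine threat
    sensitivity -- fraction of genuine threats the classifier flags
    specificity -- fraction of benign conversations the classifier leaves alone
    """
    actual_threats = n_conversations * prevalence
    benign = n_conversations - actual_threats

    true_positives = actual_threats * sensitivity
    false_negatives = actual_threats - true_positives
    false_positives = benign * (1 - specificity)

    flagged = true_positives + false_positives
    # Precision: of everything flagged, how much was a genuine threat?
    precision = true_positives / flagged if flagged else 0.0

    return {
        "true_positives": true_positives,
        "false_negatives": false_negatives,
        "false_positives": false_positives,
        "precision": precision,
    }


# Assumed scenario: 1,000,000 conversations, 1 in 10,000 is a genuine threat,
# and the classifier is 99% sensitive and 99% specific.
result = flag_outcomes(1_000_000, 0.0001, 0.99, 0.99)
for name, value in result.items():
    print(f"{name}: {value:,.4f}")
```

Under these assumed numbers, only about 1 in 100 flagged conversations would involve a genuine threat, which is why the human review step and published false-positive data matter so much in this debate.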

This situation creates a profound and unavoidable dilemma among three competing values. The first is the intense public and legal pressure for platforms to ensure safety, a modern "duty to warn" against foreseeable harm. The second is the fundamental right to privacy, with civil liberties groups like the ACLU warning that mass-scale monitoring creates a chilling effect on free expression in a world of "robot guards." The third, often overlooked, is the severe psychological toll on the human moderators who must review the most traumatic content, a job linked to high rates of PTSD and anxiety.

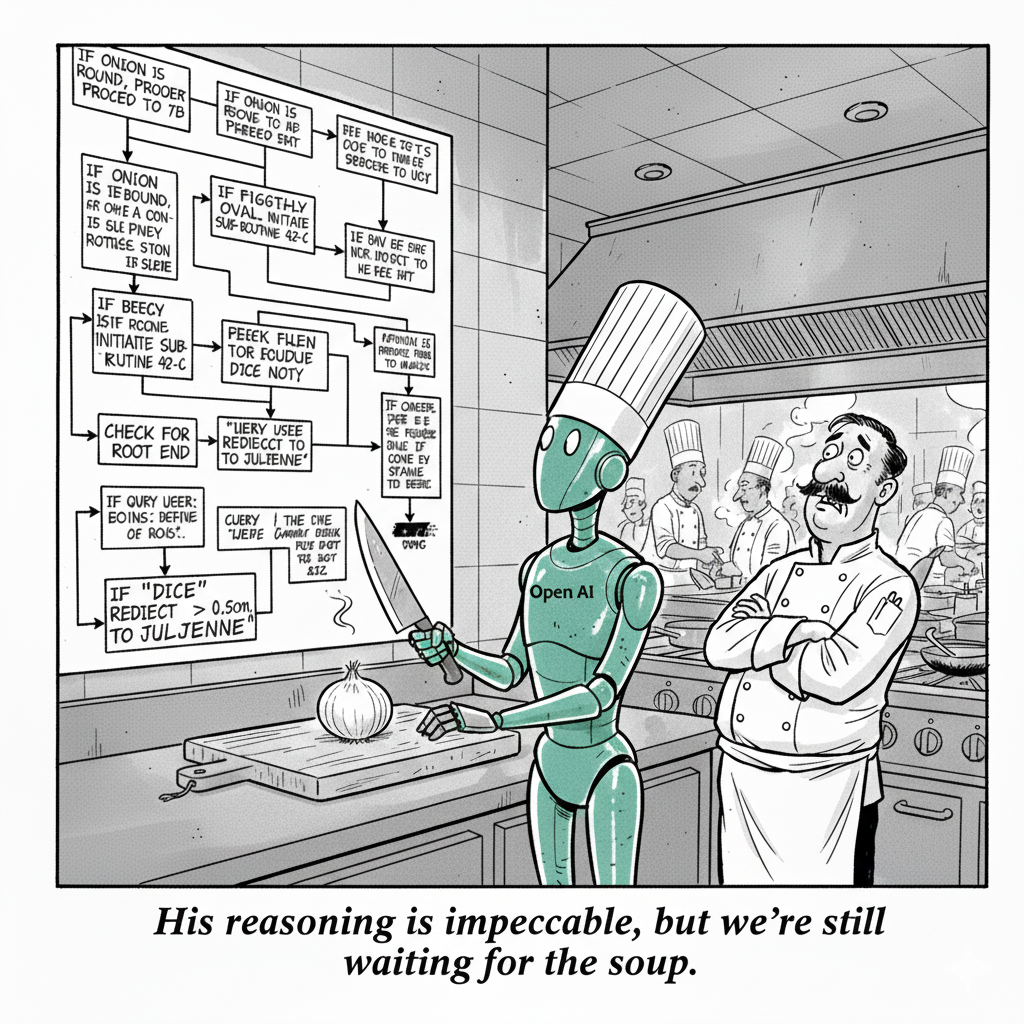

Any policy is therefore an imperfect compromise, as maximizing safety inevitably erodes privacy and harms the well-being of these hidden frontline workers. In response, experts and legal scholars have proposed several solutions aimed at fostering a more responsible and transparent ecosystem. These include calls for mandatory, standardized transparency reports that detail how harmful content is detected and handled, including data on false positives. Others advocate for new legal frameworks that provide clear guidelines and "safe harbor" provisions for companies that adhere to best practices, reducing legal ambiguity. Further recommendations focus on implementing robust human oversight, conducting independent audits to verify safety claims, and establishing industry-wide standards for the mental health and support of content moderators. Choices about monitoring and moderation are not just corporate decisions but foundational acts shaping the social contract of our digital future.